TL;DR:

- The first-ever Handmade Network Expo will take place on June 6, 2026

- Join us in re-watching Handmade Hero! (starting February 7, 2026)

- A few thoughts about Handmade's position in the industry

We are very excited to announce the Handmade Network’s first-ever in-person event: The Handmade Network Expo!

2025 was a quiet year for us where we focused on the fundamentals of the community: jams, projects, a healthy community, and interesting discussions. But we love seeing other people in person, and were sad that we didn’t have the opportunity last year. So we decided it was time to bring people together again, and with our renewed focus on real, tangible Handmade software, a day of demos seems like the perfect fit.

So: Join us in Vancouver, BC on June 6, 2026, for a day packed with demos, discussion, socializing, and celebrating the achievements of the talented programmers of the Handmade community!

(By the way, don't miss more news at the bottom. It's been a busy month!)

Is this a conference?

Nope. There will be no formal talks, nor any keynote speakers. It won’t be livestreamed. Instead it’s absolutely all about the software.

The goal is to pack the day full of demos, Q&As, discussion, and collaboration. We don’t want you sitting in one place all day; we want you socializing with others, asking questions, showing your own work, and being inspired. We want an atmosphere where, between scheduled demos, people can brainstorm around a whiteboard, pass around a laptop, or hook up to a projector for an ad-hoc presentation. In other words, we want an event defined as much by its attendees as by the formal schedule.

What’s the venue?

The Expo will be hosted at Global Relay’s beautiful event space in downtown Vancouver. It’s the perfect space for what we have in mind: a spacious floor plan we can adapt to our needs, beautiful views of the city, and WHITEBOARDS.

Who’s organizing?

All the organizing work has been done by community member Matthew Currie (AbjMakesAPizza), who’s been running meetups in Vancouver since May 2024. He’s a game developer and Vancouver native, and has done an amazing job navigating our vague ideas for an in-person Handmade Network event. We’ve also had guidance from Demetri Spanos throughout the planning process, and his feedback has been invaluable in shaping the vision for the event.

How much will it cost to attend?

We are still finalizing the details, but we expect ticket prices to be around $100 USD. We’re doing our best to keep costs low, and we’re not seeking to make a profit from this. If there are excess proceeds, they will be given to the Handmade Software Foundation so we can later deploy them in service of Handmade software.

As for travel, we’re currently looking into hotel and airline discounts, but have nothing to announce at this time. We know travel can be expensive, but because Vancouver is such a major travel hub, we hope it will be affordable nonetheless. Stay tuned for more info on this.

Tickets available soon!

We’ll have a dedicated page for the event soon where you can buy your tickets and get more info as the event approaches. Make sure you’re subscribed to our newsletter below to be notified when tickets go on sale!

I'm really looking forward to seeing people in person again. It always means a lot to meet talented people who are similarly inspired to make Handmade software, and I hope it will be inspiring to all of you as well.

Want to go through Handmade Hero again?

There has recently been a surge of people who are interested in going through Handmade Hero. It's heartwarming to see so many people still excited about it, almost 12 years since the first episode aired. So we figured: why not organize that? Why not go through it together?

So, this Saturday (February 7), we are launching Handmade Hero Replay, an informal group on the Discord that will be going through Handmade Hero together. The pace is roughly two episodes per week, and every Saturday at coffee time (~12pm Central Time) there will be a voice meeting to recap the week's episodes, led by long-standing community member BYP.

If you'd like to participate, join the Discord and express interest in the #handmade-hero-replay channel. I'm looking forward to it!

A few thoughts about the state of software

Before I close, I just wanted to share a few of my thoughts about the community's position in the software industry, and the direction I'm hoping for us to take.

I know everyone is sick of hearing about AI. I don't really want to dwell on it. But the good news is, I don't really have to, because I've been here before.

Handmade Hero started during the heyday of npm and Stack Overflow. Thought Leaders across the industry were preaching that you should never reinvent the wheel, because someone smarter than you had already made a library for that. Anything you wrote yourself was a liability, because you were stupid and had no time to maintain anything anyway. Your goal as a programmer was to write as little code as possible.

This should sound familiar.

The thing is, despite all the noise, nothing has changed about how software should be written. Most software is insanely overengineered by people who do not know what they're doing, and programmers who do know what they're doing can run circles around the rest of the industry. People are worried about AI replacing all programmers, but then programs like File Pilot remind us that, actually, computers are fast and software can be good.

Actual Handmade programming has always been, and always will be, the lifeblood and anchor of the community. For over a decade, it has served as our north star. No matter what people say about the future of software, we can point at Handmade software and say "if one programmer can do it 100x faster, why can't you?"

So keep building, and keep learning from each other, and keep setting an example. The world needs people who know, and love, what they're doing.

-Ben

Finished my game jam project, a homebrew game for the Sega Dreamcast

https://novicearts.itch.io/elysian-shadows-lost-in-transmission

This one was a doozy from a programming perspective. I implemented a lot of RPG mechanics from the ground up (enemy AI, items, map loading, etc.). Ended up feeling like I was coding a Zelda game.

A new article: "Choosing a Language Based on its Syntax?"

https://www.gingerbill.org/article/2026/02/19/choosing-a-language-based-on-syntax/

(oops, reposting because used wrong channel for previous message)

Single-file C header for doing 128-bit math operations for msvc/clang/gcc.

Includes full 128-bit signed & unsigned division, handling 128-bit quotient and remainder values.

Compiles to mostly branchless code with msvc & clang.

https://github.com/mmozeiko/overflow/blob/main/x128/x128.h
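For readers new to wide integer math, here is a minimal sketch of how 128-bit values can be built from two 64-bit limbs in portable C. It does not reproduce x128.h: the `u128_sketch` type and both function names are hypothetical, and it covers only addition and a 64x64 multiply, not the division the header provides.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical two-limb representation; x128.h may define its types differently. */
typedef struct { uint64_t lo, hi; } u128_sketch;

/* 128-bit add. The carry out of the low limb is (sum < operand), so no branch is needed. */
u128_sketch u128_sketch_add(u128_sketch a, u128_sketch b)
{
    u128_sketch r;
    r.lo = a.lo + b.lo;
    r.hi = a.hi + b.hi + (uint64_t)(r.lo < a.lo);
    return r;
}

/* Full 64x64 -> 128 multiply built from four 32x32 -> 64 partial products. */
u128_sketch u128_sketch_mul64(uint64_t a, uint64_t b)
{
    uint64_t a0 = (uint32_t)a, a1 = a >> 32;
    uint64_t b0 = (uint32_t)b, b1 = b >> 32;

    uint64_t p00 = a0 * b0;
    uint64_t p01 = a0 * b1;
    uint64_t p10 = a1 * b0;
    uint64_t p11 = a1 * b1;

    /* Sum of the three terms that land in bits 32..63 of the result. */
    uint64_t mid = (p00 >> 32) + (uint32_t)p01 + (uint32_t)p10;
    assert((mid >> 34) == 0); /* three 32-bit terms cannot overflow 64 bits */

    u128_sketch r;
    r.lo = (mid << 32) | (uint32_t)p00;
    r.hi = p11 + (p01 >> 32) + (p10 >> 32) + (mid >> 32);
    return r;
}
```

Multiplying `UINT64_MAX` by itself with `u128_sketch_mul64` should give `hi = 0xFFFFFFFFFFFFFFFE` and `lo = 1`, which is a handy sanity check for any implementation of this kind.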

if you want 32-bit or 64-bit int to hex string in simd (sse2, ssse3, avx512, neon, wasm and rvv) or just in plain C swar, no loops: https://gist.github.com/mmozeiko/68c2d1ce466422b506b2c86e4f603f53
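To give a feel for the plain C SWAR ("SIMD within a register") variant, here is a minimal sketch for the 32-bit case. It is an illustration written for this post, not the code from the gist: the function name `u32_to_hex_swar` is hypothetical, and the gist may spread nibbles and pick digits differently.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper for illustration; not the code from the linked gist.
   Writes 8 lowercase hex digits plus a terminating NUL into out. */
void u32_to_hex_swar(uint32_t x, char out[9])
{
    assert(out);

    /* Spread the 8 nibbles of x into the 8 bytes of v, most significant first. */
    uint64_t v = x;
    v = ((v & 0x00000000FFFF0000ull) << 16) | (v & 0x000000000000FFFFull);
    v = ((v & 0x0000FF000000FF00ull) <<  8) | (v & 0x000000FF000000FFull);
    v = ((v & 0x00F000F000F000F0ull) <<  4) | (v & 0x000F000F000F000Full);

    /* Per byte: n + 6 sets bit 4 exactly when n >= 10, giving a 0/1 flag. */
    uint64_t is_alpha = ((v + 0x0606060606060606ull) >> 4) & 0x0101010101010101ull;

    /* '0' + n for digits, plus 39 ('a' - '0' - 10) for the letters a..f. */
    v += 0x3030303030303030ull + is_alpha * 39u;

    /* Explicit stores keep the result independent of host endianness. */
    out[0] = (char)((v >> 56) & 0xFF);
    out[1] = (char)((v >> 48) & 0xFF);
    out[2] = (char)((v >> 40) & 0xFF);
    out[3] = (char)((v >> 32) & 0xFF);
    out[4] = (char)((v >> 24) & 0xFF);
    out[5] = (char)((v >> 16) & 0xFF);
    out[6] = (char)((v >>  8) & 0xFF);
    out[7] = (char)(v & 0xFF);
    out[8] = '\0';
}
```

All eight digits are converted by the same handful of 64-bit operations, which is why no loop or lookup table is needed. On a little-endian target the eight stores could likely be replaced by a byte swap and a single 8-byte copy.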

Added a Replace Colors function to make it easier to try different color palettes. Idea from @thebmxeur

Added Hotkeys to my pixel art app. You can bind different keys to actions in Settings.

You can get the latest release here: https://makinggamesinc.itch.io/spixl-pixel-art-editor

I published a text version of a talk I gave at Handmade Seattle a couple years ago, which is sort of a synthesis of why I think the software industry should care about low level programming, and how I think the Handmade ethos relates to "low level" in the big picture: https://bvisness.me/high-level/

(I have also uploaded the original talk to my YouTube channel.)

Added the brush shape outline to my pixel art editor. You can download the latest version here: https://makinggamesinc.itch.io/spixl-pixel-art-editor

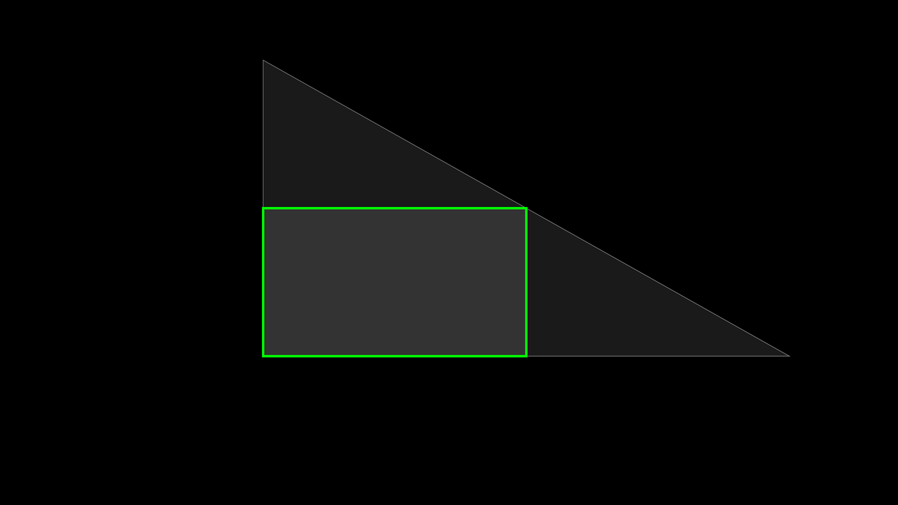

Minimal D3D11 bonus material: fullscreen triangle

Nothing major, but since I was already revisiting it: a cleaned-up version of an older, private gist with the basic setup for rendering a fullscreen triangle (as opposed to a fullscreen quad)
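For anyone unfamiliar with the trick, the sketch below shows the arithmetic commonly used to generate a fullscreen triangle from nothing but a vertex index. In D3D11 this would normally live in a vertex shader driven by `SV_VertexID` with no vertex buffer bound; it is written in C here purely to show the math. The struct and function names are hypothetical, and the gist may derive its coordinates differently.

```c
#include <assert.h>

/* Clip-space position plus texture coordinate for one vertex. */
typedef struct { float x, y, u, v; } FsVertex;

/* Hypothetical helper: maps vertex index 0, 1, 2 to an oversized triangle
   that covers the whole [-1, 1] x [-1, 1] clip-space square. */
FsVertex fullscreen_triangle_vertex(unsigned id)
{
    assert(id < 3);

    FsVertex r;
    r.u = (float)((id << 1) & 2u);  /* 0, 2, 0 */
    r.v = (float)(id & 2u);         /* 0, 0, 2 */
    r.x = r.u * 2.0f - 1.0f;        /* -1,  3, -1 */
    r.y = 1.0f - r.v * 2.0f;        /*  1,  1, -3 */
    return r;
}
```

The three vertices land at (-1, 1), (3, 1), and (-1, -3). The parts of the triangle outside the viewport are clipped, and within the visible square the interpolated `u` and `v` run from 0 to 1 with (0, 0) at the top left. Compared with a quad, there is no diagonal seam where pixels along the shared edge get shaded twice.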

CactusViewer now detects and matches the sort order from @vjekoslav's awesome FilePilot in addition to Windows Explorer. (can be toggled in settings)

also @orcenhaze has implemented a fantastic feature I have been wanting to add since the beginning: drawing on images! The edits can be exported to clipboard or saved to disk through the same editing pipeline.

Also, this new release adds a ton of fixes for stability issues that caused Cactus to crash before. To help expedite this, there is now also a very verbose log file at %APPDATA%\CactusViewer\debug.log that you can check if things go wrong. If you experience a crash, feel free to DM me!

get the release and see complete release notes here:

https://github.com/Wassimulator/CactusViewer/releases/tag/v2.3.0

With help from the Handmade community I created a first &radiotron alpha release.

You can download it for either MacOS or Windows.

Windows

MacOS

The MacOS release works on both Intel and Apple Silicon. Note, though, that neither release is code-signed at this point, so you will get warnings when trying to run them. I can also build Radiotron on Linux, but haven't put in the time yet to create something like an AppImage. Let me know if you'd like to try Radiotron on Linux specifically.

Any feedback is welcome!

More info about Radiotron at http://www.nicktasios.nl/radiotron

I was wondering if anyone could see if this release runs on their Windows computer? It was built with MinGW on Mac. It probably won't 😅 Any help is appreciated:

https://github.com/Olster1/pixelr/releases/tag/1.0.0

new blog post about internals and challenges faced by my time travel debugger &blindspot with some comparisons to rr and WinDbg

https://handmade.network/p/675/blindspot-debugger/blog/p/9099-how_to_record__replay_native_programs

Hosting a website from home with no operating system, using a networking stack I wrote from scratch and an RMII/MDIO driver I also wrote from scratch: http://gsan.whittileaks.com. It's HTTP only, so make sure your browser does not automatically add https:// in front. Come break it 🙂

Full post here https://handmade.network/forums/wip/t/9116-lneto_-_heapless_userspace_networking_stack_in_go

Working on a 3D game + tiny game engine from the ground up. No Godot / no Unreal / no Unity. Thanks to Casey Muratori / Handmade Hero for getting me started and for being an invaluable gamedev resource. Building the engine as well was supposedly an insane idea at the time, and at times I feel it still is.

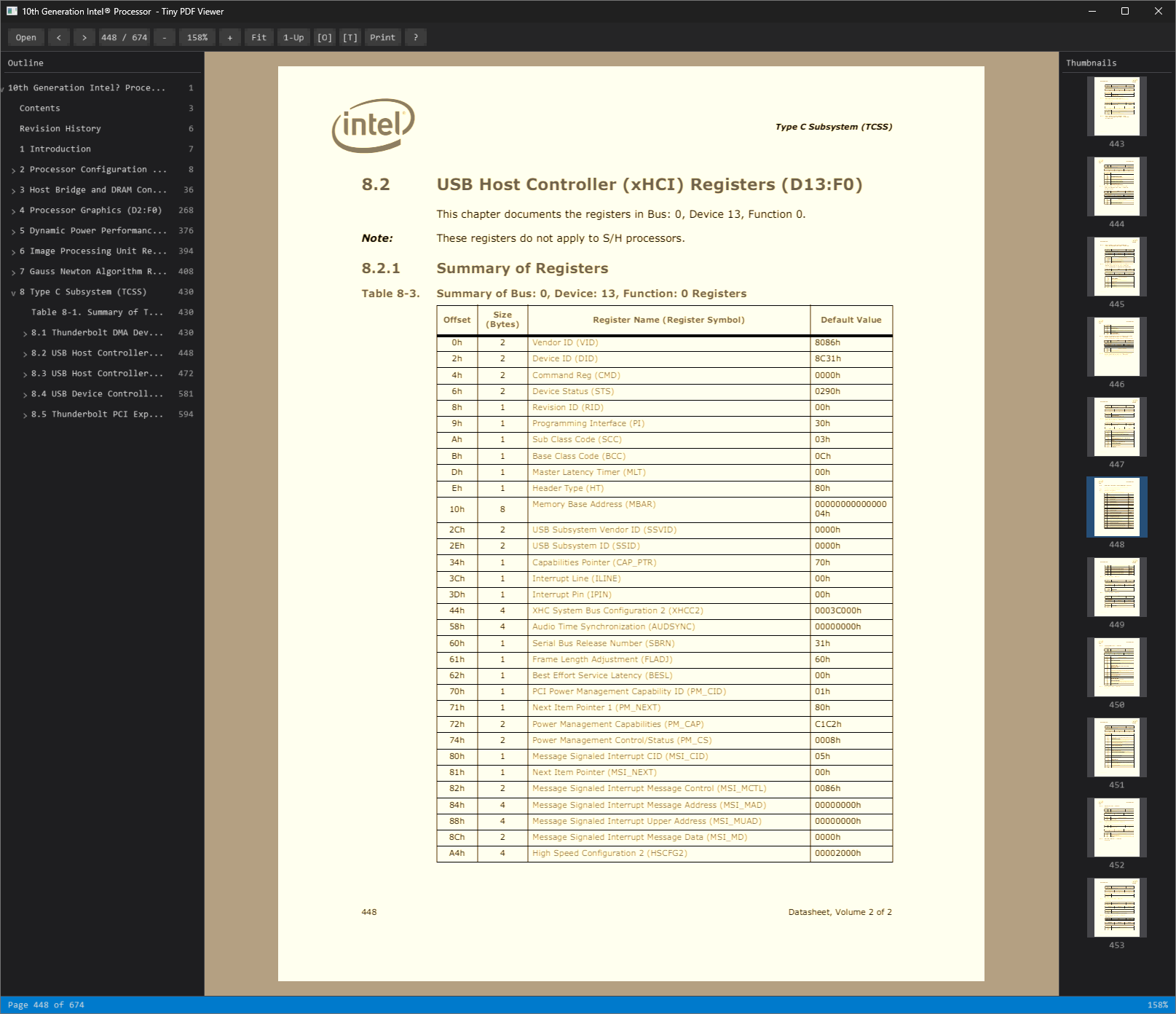

Building TinyPDFViewer, which does what a PDF viewer should do and Adobe never did: let me read PDFs in peace (no login, no fancy features) while protecting my eyes. It's around 5.8 MB total (5.5 MB is the pdfium DLL itself; my app is around 340 KB). It has a reading mode which finally works, supports the quick shortcuts I needed, and is blazingly fast with a low memory footprint. Over the past year I have been slowly but steadily building tiny apps to replace typical software on Windows.

Working on a C++ / DirectX 12 notepad as a fun side project (started after MS made Notepad do stuff it's not supposed to do, like auto sign-in). It's around 2 MB with static linking (no dynamic linking against vcruntime). So far it has zoom, multi-lingual text, a custom UI (written from scratch on top of DirectX 12), lines, split down / up, and copy/paste. UTF-8 support isn't perfect: it does display text, but editing still has issues.

Vision: No bloat / no plugin / multi-lingual / simple text editing with fast startup and core editing features.

I've been playing around with giving the &adaw waveform edit menu its own timeline view. I liked the lower-frequency wave that I was drawing in previous versions because it's not so noisy, but I don't know if I actually want to display inaccurate visualizations. Zooming in on the wave is my compromise for now. There's definitely more work to be done on the wave drawing front though. I really like Audacity's LODs as you zoom in; I'm probably going to replicate that.

PBR shading and textures with diffuse, roughness, AO and normal maps.

Also turned the torch lighting into a proper point light positioned around the player, and then gave it some breathing movement and a more appropriate lighting color.

Finally got around to improving the lighting, with more appropriate and dramatic torch lighting. Before, the light intensity was constant per scanline (horizontal for walls/ceiling and vertical for walls). Now it uses a more appropriate spherical attenuation around the player position (as it was in the original Odin project way back then).

This makes it look more like an actual 3D ray casting/tracing rendering, as the lighting behaves as though there were camera-correct ray directions per-pixel.
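As a rough illustration of what spherical attenuation around a light position can look like, here is a minimal sketch. The falloff formula `1 / (1 + k * d^2)`, the constant `k`, and all names are assumptions made for this example; the post does not say which falloff the project actually uses.

```c
#include <assert.h>

typedef struct { float x, y, z; } Vec3;

/* Hypothetical helper. Assumed falloff: 1 / (1 + k * d^2), where d is the
   straight-line distance from the lit point to the light and k is a made-up
   tuning constant. Using d^2 directly avoids a square root. */
float point_light_attenuation(Vec3 hit, Vec3 light, float k)
{
    assert(k >= 0.0f);

    float dx = hit.x - light.x;
    float dy = hit.y - light.y;
    float dz = hit.z - light.z;
    float d2 = dx * dx + dy * dy + dz * dz;

    return 1.0f / (1.0f + k * d2);
}
```

Because the result depends on the per-pixel hit position rather than on a single distance shared by a whole scanline, two pixels on the same row can receive different intensities, which is what produces the rounded pool of light around the player.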

Also added some flame-like light flickering to get more of the feeling of walking around with a torch.

Wanted to see if it would be possible to free the camera to look up and down while keeping it pseudo-3D and still casting only a single horizontal line of rays across the screen. Initially it wasn't, because the renderer relied heavily on vertical symmetry: only the top half of the screen was computed, with the bottom half using mirrored results. But ultimately I found that it is absolutely still possible (albeit not necessarily optically correct, though it still looks reasonably plausible) by computing where the horizon line should go when looking up or down, then computing the full height of the screen, with the areas above and below the horizon line computed relative to its position. Performance dropped a bit, but not by much, all things considered. The CUDA kernel now uses the full screen grid, as opposed to the half it previously used.
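One common way to get this effect in a raycaster is often called y-shearing: the horizon row is shifted by a pitch offset and everything else is measured from it. The sketch below assumes that approach, unit-height walls, and a camera at mid-height; whether the project works exactly this way is not stated, and every name here is hypothetical. It is plain C rather than CUDA to keep the arithmetic easy to follow.

```c
#include <assert.h>

typedef struct { int top, bottom; } RowSpan;

/* Horizon row for a given pitch offset in pixels (positive moves the horizon down). */
int horizon_row(int screen_h, int pitch_px)
{
    assert(screen_h > 0);
    return screen_h / 2 + pitch_px;
}

/* Rows covered by a wall slice of wall_px pixels, centred on the horizon
   instead of on the middle of the screen, then clamped to the screen. */
RowSpan wall_span(int screen_h, int horizon, int wall_px)
{
    RowSpan s;
    s.top = horizon - wall_px / 2;
    s.bottom = horizon + wall_px / 2;
    if (s.top < 0) s.top = 0;
    if (s.bottom > screen_h) s.bottom = screen_h;
    return s;
}

/* Distance to the floor or ceiling seen at row y. Rows above and below the
   horizon are each computed directly, with no mirroring of one half. */
float plane_row_distance(int screen_h, int horizon, int y)
{
    int dy = y - horizon;
    if (dy < 0) dy = -dy;
    if (dy == 0) dy = 1; /* avoid dividing by zero exactly on the horizon */
    return (0.5f * (float)screen_h) / (float)dy;
}
```

With a 200-row screen and a 30-pixel pitch offset, the horizon sits at row 130, so more rows lie above it than below. That asymmetry is why the top-half-only shortcut no longer works and every row has to be computed.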

Hello Handmade Network, and happy new year! 2025 was a relatively quiet year for us where we focused on just running our jams and building relationships across the Handmade community. But we’ve got lots of plans for 2026 and we’ve already been at work kicking them off!

TL;DR:

- We are finally launching the Handmade Software Foundation and using it to support the development of more Handmade software

- We’re planning an in-person gathering for this spring, more details forthcoming

- We’ll be doing two jams as usual, dates TBD based on other events

Launching the Handmade Software Foundation

A few years ago we announced that we were creating a nonprofit to support the development of Handmade software. Well, it took some time, but I am pleased to announce that the Handmade Software Foundation is now officially a 501(c)(6) nonprofit corporation.

What does this mean? It means we are a nonprofit under the category carved out for business leagues, chambers of commerce, etc. In other words, we have a nonprofit category that allows us to work towards improving business conditions in the software industry. This is perfect for us, because the software industry desperately needs fixing, and by supporting Handmade software we can make more programmers aware of how powerful computers are, while also providing direct benefit to Foundation members. And the nonprofit status exempts us from income tax, meaning that 100% of donations will go directly to the nonprofit, not the government.

The 501(c)(6) differs from the more familiar 501(c)(3) designation in that we are not a charity. The 501(c)(3) is explicitly designed for charitable organizations, and confers the additional benefit of donations being tax-deductible. Over time, though, the definition of a 501(c)(3) has become extremely distorted, especially in the software space, since companies were able to convince the IRS that making open-source software is a charitable/scientific activity. The result is that large companies were able to fund their own development by creating a “charity”, open-sourcing some of their core technology, and then building their extremely lucrative closed-source software on top. That way they get to deduct the core tech expenses from their taxes! What a deal!

This is stupid and the IRS is not allowing this anymore. This set us back by at least a year on our nonprofit journey, but it was a blessing in disguise: the 501(c)(6) is a vastly better category that gives us much more freedom in our activities and fewer restrictions on how we spend our money. And we can support for-profit projects (like File Pilot, for example) without worry.

What will the Foundation do?

The number one goal of the Handmade Software Foundation is to support, promote, and sustain the development of Handmade software.

In 2025 we saw the breakout success of File Pilot. If you somehow haven’t seen it, it’s a brilliant little file explorer for Windows, made by a single developer, that is a mere 2 MB and is shockingly fast. It has a completely custom UI and has so much love and attention poured into every pixel. And it came out at the perfect time, as Microsoft has continued to ship baffling bugs and horrible performance regressions month after month. They even messed up Windows Explorer’s performance, and then “fixed” it by preloading it in the background so the horrible performance wouldn’t be so obvious. Who would win: a trillion-dollar company, or one guy from Croatia who watched Handmade Hero? Obviously the Croatian.

File Pilot is an excellent template for what Handmade software should be, and how to launch it. We want to take the lessons learned from File Pilot and use them to launch more industry-redefining software.

Basically, the thinking goes that Handmade programmers have the technical chops to make amazing software, but don’t always have the aptitude or desire for the many, many other tasks that go into shipping. Payments, licensing, emails, support, design, marketing, testing, the list goes on. At the same time, we want to foster self-sustaining businesses. We feel we can bring people together to provide support and guide developers through that process.

Our core value is that we will never compromise on self-sufficiency. We will not lock you into a Handmade E-Commerce Platform; instead, we will give you the code and the resources you need to run it yourself. I’m excited to spend more time building these resources over the coming months.

How will the Foundation make money?

Memberships! You will be able to join the Handmade Software Foundation as a member for a monthly subscription fee, the exact pricing of which is yet to be determined.

Membership will grant you access to a private Discord channel with other members, access to the aforementioned business resources, and possibly more benefits down the line. We have other ideas for Foundation activities, but we don’t want to distract ourselves from our primary goal as we get the Foundation off the ground.

Can I join?

Not quite yet! We are actively building the payment processing infrastructure now and look forward to launching memberships as soon as possible.

In-person gathering this spring!

It’s been a while now since we got people together. We figure we should change that!

Later this spring, we will be hosting an in-person gathering in Vancouver, BC. Community member Matthew (AbjMakesAPizza on Discord) is a Vancouver native who kindly volunteered to organize an event. We’ve been looking at a variety of venues and have a few good leads, and we hope to have a final location and date soon. We’re looking to host up to 100 people or so, which we feel is a healthy number to get started with in our first year.

The plan is to host a “super-meetup”, of sorts: not a conference, but not just a meetup either. We want to create a space for demos, informal presentations, social gathering, jamming, and generally inspiring each other. Think a big room with lots of demo tables, a projector, a few chairs, and a mic. What’s on the agenda? Demos! Perhaps some lightning talks? That all remains to be seen as we find what the space will be capable of.

We’re targeting April or May, so although it’s somewhat short notice, we hope to give you all enough time to make travel plans. Stay tuned for more info!

Jams as usual

Finally, we’re planning to run two jams again this year. The topic for the first jam has yet to be determined; the second will be Wheel Reinvention as usual.

Unfortunately at this time we don’t yet know when these jams will be held. The timing will depend on our in-person gathering in Vancouver, plus any other events of interest to Handmade folks (such as another BSC). But, just to confirm, we are doing them :)

As for jam topics, I’ve been pondering how to keep the jams from getting stale. And I’m open to ideas! Should we give out prizes for different categories? Make people draw project ideas at random? Shorten the jams? Make people work in teams? In the end, I want the jams to spark new ideas and kickstart interesting projects, and that means we need to get creative juices flowing and get lots of talented programmers to participate. I’d love to hear your thoughts on Discord or in the comments below.

So long!

Look forward to more news next month! I’m committed to publishing one news post per month this year, for actual real this time. You may all heckle me if I fall behind.

May the Handmade community make a dent in the software industry in 2026!

Happy fall to everyone in Handmade! I hope you had a wonderful summer, and that you spent the perfect amount of your summer writing amazing Handmade software. Ideally you were actually outside enjoying the sun and the air while doing this, and ideally you climbed a mountain or jumped in a lake afterward—but if this is wishful thinking, then I hope you at least have some cool software to show for it.

Fall is a wonderful season for Handmade, because it means that we get to do another Wheel Reinvention Jam. And this is our fifth annual WRJ!

For me, the Wheel Reinvention Jam is one of the most important things we do as a community, because it reminds us that wheels do need to be reinvented, and that even the most ambitious projects need to start somewhere. I wrote this on the jam page, but wheel reinvention is in the very DNA of the Handmade community. It is also the value most commonly under fire from the rest of the software industry. Low-level programming—sure, that's important, that makes sense. But reinventing the wheel? How dare you? Don't you know how many smart people made those wheels??

My dream is to someday see a Wheel Reinvention Jam project grow and mature into a real product that changes expectations in the software industry. Handmade software can easily do that—just look at File Pilot or JangaFX. Maybe one of this year's projects will get there someday!

Anyway, I hope you join us in just a few short weeks. As usual, the jam is one week long, from Monday, September 22 to Sunday, September 28. All the details can be found on the jam's home page.

In other news, we are seeing an enormous surge in Handmade content right now—so much that I can't even keep up with it myself! Here are the big ones I'm aware of:

- The Better Software Conference in July was a smashing success, especially for a first-time conference. Their recordings are exceptionally well done and can be found on their YouTube channel.

- Nic Barker, best known for the Clay layout library for C, is releasing a programming fundamentals course called The Simple Joy of Programming. Unlike most programming courses, it builds its way up from assembly while still remaining extremely beginner-friendly. It is an absolute delight and I recommend subscribing to his Patreon for access.

- The Wookash Podcast continues to bring on amazing guests. Where else can you find a two hour long interview with Jimmy Lefevre about a Handmade replacement for Harfbuzz? Or a five hour long interview with Sean Barrett about software rendering?

- Casey himself is now a regular guest on The Standup, a show by popular programming content creator The Primeagen. It is a wonderful and very unserious thing, but Ryan Fleury was also just on the show to talk about the RAD Debugger, which is one of the most impressive pieces of Handmade software being developed today.

It's genuinely amazing to see so much of this content being put out into the world right now, and how much enthusiasm there seems to be for it. I know it will make a big impact on a lot of people!

Also, #project-showcase on the Discord is just crazy right now. Voxel engines, blog posts for SoME, indie games, videos on CPU microarchitecture, finger drumming trainers...this community is amazing as always. I never know what I'm going to see when I click into #project-showcase, but I'm always happy when I do.

There's one last thing I wanted to talk about: Abner Coimbre recently announced that he is shutting down his conferences. This means that Handmade Boston and Handmade Seattle are no more.

This was the right decision on Abner's part. Since we formally separated ourselves from Abner earlier this year, things have seemingly only gotten worse over there. His announcement post above only confirmed our decision to split, as the original version of the post was extraordinarily unprofessional. You can find my thoughts (and others') on the Discord in #network-meta if you prefer, but at this point, what matters is simply that Abner's conferences are over.

The obvious question that many in the community have been asking is: will the Handmade Network run its own event in 2026? The answer is: hopefully yes! At this stage, we are seriously considering it, looking into cities and venues, and discussing what such an event should look like. It will certainly look very different from a mainstream tech conference, for many reasons that I'll get into another time.

I hope to have more to announce soon. We want to make sure that this event is by the Handmade community, for the Handmade community, and a celebration of everything we stand for. And thankfully, the Handmade community is thriving. I'm glad to be a part of it as we go into Handmade's second decade.

See you all in a few weeks for the jam, and stay tuned for more news this fall!

-Ben

It's June 9, and that means that the X-Ray Jam has officially begun!

Participants have one week to make a project that exposes the inner workings of software. If this is your first time hearing about it, it's not too late to participate! Head over to the jam page to find more information about the theme, submission instructions, and inspiration to get you started.

After the jam, we plan to do another recap show like usual. The show is tentatively scheduled for Sunday, June 22, one week after the conclusion of the jam, but stay tuned for official confirmation.

We look forward to seeing all the updates this week!

-Ben

The official page for the X-Ray Jam is now online! Check it out, invite your friends, and join us on June 9 to dig into how software works.

The premise of the X-Ray Jam is to point an X-ray at software and see how it works "on the inside". It's a riff on the Visibility topic from previous years and a refinement of what made that topic interesting.

See, the original concept of "visibility" was not about "visualization"—the point was to make visible the invisible workings of the computer. To "visibilize" it, not necessarily "visualize" it. Obviously I love what the community chose to submit for those jams, but they admittedly did not stick to that topic very well. Even my own submission to the first Visibility Jam was a tool for automatically tracing a network, not really a tool to "make the packets visible" or whatever.

We were also looking back on last year's Learning Jam and trying to decide what to do with it. We liked the concept, and the community responded well to the opportunity to learn and share knowledge. But the format was weird (two weekends?) and three jams was a bit overwhelming even with the earlier start.

But the word "X-ray" perfectly captures the best parts of each. The best parts of "visibility" were the tools people built to explore data and understand their programs. The best parts of "learning" were the write-ups exploring whatever interested them. They complement each other well and it seems fitting to combine them into one theme.

I also think that X-Ray and Wheel Reinvention are perfect for each other. If we want to challenge norms in the software industry, we have to build new software, but to build meaningfully different software, we have to build on meaningfully different foundations. The X-Ray Jam provides an opportunity for us as a community to branch out, deepen our understanding, and learn how things work at a lower level. The Wheel Reinvention Jam allows us to put that knowledge into practice.

It reminds me of the double-diamond design process, which I first read about in The Design of Everyday Things (great book!), and which I see applications for everywhere I look. Designing anything new requires divergence and convergence—fail to diverge, and you never leave the status quo; fail to converge and all you know is trivia. Repeating this process is how we achieve something better.

Anyway, I look forward to jamming with you all in June!

-Ben

Hello Handmade! 2025 is moving right along, and Handmade projects along with it. But first:

Introducing the X-Ray Jam!

We are doing two jams this year, and the first is a new jam we’re calling the X-Ray Jam. This is a riff on the “visibility” topic from years past that I’m very excited about.

In short: point an X-ray at your software! The purpose of the X-Ray Jam is to explore our systems and learn more about how they work on the inside. It combines the best parts of the Visibility and Learning jams into one. Here are the details:

- When: June 9-15, 2025

- Topic: X-ray some program and figure out what's happening inside.

- You submit: A program or tool, like previous years, or a blog post, like in the Learning Jam.

For example, maybe you'd build a program to record and replay all the window messages received by your program. Or you'd investigate why the Windows 11 right-click menu is so slow to open. (What is it doing?!) Or perhaps you could hack up a compiler to log information about various decisions it makes or heuristics it uses. The topic is open to whatever inspires your curiosity.
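To make the first idea a little more concrete, here is a minimal sketch of record-and-replay in Go. Everything in it is an assumption for illustration: `Message`, `App`, `Recorder`, and `Replay` are hypothetical names, and a real Windows version would hook the actual message loop rather than this toy dispatcher. The idea is simply to log each message before handing it to the program, then feed the log to a fresh copy of the program and compare the results.

```go
package main

import "fmt"

// Message is a hypothetical, simplified stand-in for a window message.
// A real Win32 message would carry a window handle, a message ID,
// and two parameters instead.
type Message struct {
	Kind string // e.g. "click" or "key"
	X, Y int
}

// App is a toy program whose state depends only on the messages it receives.
type App struct {
	Clicks int
	LastX  int
	LastY  int
}

// Handle updates the app state in response to one message.
func (a *App) Handle(m Message) {
	if m.Kind == "click" {
		a.Clicks++
		a.LastX, a.LastY = m.X, m.Y
	}
}

// Recorder sits between the message source and the app.
// It logs every message before passing it on.
type Recorder struct {
	Log  []Message
	Next func(Message)
}

// Dispatch records the message, then forwards it to the wrapped handler.
func (r *Recorder) Dispatch(m Message) {
	r.Log = append(r.Log, m)
	r.Next(m)
}

// Replay feeds a recorded log to a handler in the original order.
func Replay(log []Message, handle func(Message)) {
	for _, m := range log {
		handle(m)
	}
}

func main() {
	// Run the "live" session through the recorder.
	live := &App{}
	rec := &Recorder{Next: live.Handle}
	rec.Dispatch(Message{Kind: "click", X: 10, Y: 20})
	rec.Dispatch(Message{Kind: "key"})
	rec.Dispatch(Message{Kind: "click", X: 30, Y: 40})

	// Replay the log into a fresh app and compare the final states.
	replayed := &App{}
	Replay(rec.Log, replayed.Handle)
	fmt.Println(*live == *replayed) // prints true
}
```

Replay only reproduces the session here because the toy app's state depends on nothing but its messages. A real program also reads the clock, the file system, and so on, and finding those hidden inputs is very much in the spirit of the jam.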

Note that this jam is a week long, just like the Wheel Reinvention Jam. After some discussion and reflection, we’ve come to feel that one week is the ideal amount of time for our jams—enough time to explore a new topic, get confused, iterate a bit, and put it all together for submission. The general expectation would be: during the week, work in the evenings, and on the weekend, spend the whole day jamming.

We’re still working on the home page for the jam, but hope to have it up fairly soon. We’re also working on expanding the blog post capabilities of the website to make the submission experience more pleasant. Stay tuned.

Orca is coming along…

Martin recently posted a new update over on the Orca website, with some exciting updates about the project’s progress. Most notably, Martin has been hard at work on the debugging experience, and has implemented both source-level and bytecode-level debugging.

Not only will this make for an amazing development experience, but in a funny twist of fate, Orca may already have one of the most sophisticated WebAssembly debuggers on the market. Besides that, multiple community contributors have made great progress on the standard UI library and the build system. Go give it a read.

If you want a good programming podcast…

Last month we announced that Unwind is back. It is, I promise—we have another episode recorded—but unfortunately our previous editor wasn’t available and we have yet to find another. If you know a podcast editor, please send them our way (via [email protected]).

BUT, luckily, Łukasz of the Wookash Podcast has been interviewing just about everyone the Handmade community could ever want to hear from. Since starting up the podcast just last year, Łukasz has interviewed: Casey (of Handmade Hero fame, obviously), Ginger Bill (creator of Odin), Ramon Santamaria (creator of Raylib), Ted Bendixson (creator of Mooselutions, in two separate episodes!), and many other programmers well loved by the Handmade community.

And in fact, Vjekoslav (creator of File Pilot) will be on the podcast this Saturday! Enjoy this teaser, and go subscribe to the Wookash Podcast on YouTube to catch the episode when it goes live.

A leadership update

Sadly I must close out this news post with some less happy news: Colin Davidson has chosen to step down from his position as admin, in order to focus on various life matters. Don’t worry, he’s not going anywhere—he’ll still be part of the community, and for that I am very grateful. He also remains the treasurer of the Handmade Software Foundation, a role which exposes him to much less Discord drama 😛

So, for the time being, Asaf and I are running this thing ourselves. We should be able to get by, but as we ramp up the Foundation and continue to run various community events, we will be looking for others to help us out. Luckily, we are surrounded by talented, thoughtful, capable programmers from all walks of life, so I’m confident that we’ll be able to find exactly the people we need for our community initiatives to succeed.

Until next time!

-Ben

Hello Handmade community! I hope your 2025 is going brilliantly so far. I have a few key updates for you to kick off the year.

Unwind is back!

I am pleased to announce that, after a bit of a hiatus, our interview show Unwind is back. Our latest episode is an interview with Alex (aolo2), a web developer turned CPU engineer whose projects are a constant inspiration. We discuss the creation of his collaborative whiteboarding app, the Slack replacement he made for a previous job, and his latest project, a lightning-fast CPU trace viewer. You can watch the episode on YouTube.

For this re-launch of Unwind, we’ve changed the format of the show from live to pre-recorded interviews. It’s basically a podcast now instead of a live show, and we hope this will help us put out episodes more frequently and consistently. Personally, I’m very excited for this change. The old Handmade Network Podcast, hosted by Ryan Fleury, generated an incredible amount of conversation, reached a wide audience, and clarified what we care about as a community. Past episodes of Unwind had great content, but the format made it difficult to have the same kind of impact. We hope the podcast format takes us back to those old heights.

And speaking of the podcast, we have actually rebranded the Handmade Network Podcast as Unwind and are using the same podcast feed. If you had previously subscribed on any podcast platform, you should now see Unwind in your subscription feed. All the old episodes are still available too, so we hope you enjoy perusing the backlog. However, please be aware that some episodes (like the most recent one) are intended to be watched in video form, so we recommend watching on YouTube or Spotify. You can always find the audio feed at https://handmade.network/podcast.

The foundation is still in progress…

The Handmade Software Foundation is still in the process of being launched. After a failed attempt at creating a 501(c)(3), last year we submitted a new application to the IRS for a 501(c)(6). I believe this is a blessing in disguise—unlike a 501(c)(3), the 501(c)(6) designation allows us to explicitly focus on benefiting the software industry.

It seems the IRS has soured on software 501(c)(3)s anyway. I blame OpenAI. But in fact, the tax code states that 501(c)(3) organizations must exist for educational, scientific, or charitable purposes, and it’s actually not clear that most software nonprofits fit those descriptions. In the year 2025, it seems clear that the mere act of releasing software for free is not particularly “charitable”, and the definitions of “educational” and “scientific” can only be stretched so far. After all, if your “charity” is funded by a big tech company that depends on your software for its business, are you really a charity or are they just avoiding taxes?

Now, a 501(c)(6) does not have the same benefits as a 501(c)(3). The largest difference for donors is that donations are NOT tax-deductible. However, as a 501(c)(6) we will still pay no income tax, meaning that 100% of your donation goes to us and our mission.

While we wait for the IRS to process our application, we’re still working behind the scenes to set up donations and make tangible plans to support Handmade software. You can view the website at https://handmade.foundation/, although it’s a bit of a work in progress and the specifics are subject to change.

We need your help to plan this year’s jams!

We got a late start on planning jams this year due to a pile of other important business. We’re still planning to run the Wheel Reinvention Jam this year like we always do, but we’d appreciate your feedback on what other jam or jams we should run this year. If you have any thoughts or ideas, please jump into the #network-meta channel in the Discord and join the discussion!

Thanks as always for being an amazing community. I’m proud to be part of it.

-Ben

I regret to announce that the Handmade Network and Handmade Software Foundation will no longer be working with Abner Coimbre and Handmade Cities going forward.

This is obviously not a decision we made lightly. Since Handmade Seattle concluded just over two months ago, we have been attempting to come to an agreement that would allow us to continue working together. Unfortunately, it has become clear that Abner’s vision for Handmade has diverged from ours, and despite our best efforts, we have been unable to reconcile our differences.

This means that the Handmade Network will no longer support, promote, or endorse any Handmade Cities conferences or meetups. We disclaim all affiliation with Handmade Cities going forward. Community members are of course still welcome to attend Abner’s events if they wish, but with the understanding that we have zero influence over any content, logistics, or attendee experience.

How did we get here?

I realize this announcement may come as a shock, especially given that just two months ago we published a post titled The Next Ten Years, where Abner and I spoke optimistically about our partnership. That post was authored over the months between our summer meeting and Handmade Seattle in November. Unfortunately, in what has become a distressing trend, Handmade Seattle fell far below expectations, and we and many community members were shocked by some of the content Abner chose for the conference—in what was billed as the 10-year anniversary of Handmade, no less. Abner’s response to community feedback afterward only intensified our concerns.

We have been trying for the past two months to work with Abner to resolve these concerns. Unfortunately, we have been entirely unable to come to any kind of mutual agreement, despite some contrary statements by Abner in prior months.

We considered and discussed many possible approaches that would allow us to continue working together in some form. We have always valued events that bring the Handmade community together in real life. In the end, though, it has become clear that Abner’s goals for Handmade Cities are very different from our goals for the Handmade Network and Handmade Software Foundation, and that he prefers to run his business without our input. Therefore, we have concluded that it is best to simply part ways.

Moving forward

I am deeply sad that it has come to this point. I will forever be grateful to Abner for taking the initiative to bring the Handmade Network together, and for having the vision to imagine that a community of talented, fearless programmers could make a real difference in the world of software. And I will forever be grateful to him for bringing me into Network leadership in 2018, and giving me the chance to lead the community that made me who I am as a programmer. But as the lead of the community, I am forced to make hard decisions about its future, and this has been one of the hardest.

Although we on the Handmade Network team strongly disagree with Abner about the purpose and goals of Handmade, we nonetheless wish Abner the best, and hope that he finds success in his indie endeavors. We will not be hosting any kind of official in-person events in 2025, but discussions are ongoing for 2026 and beyond.

If you wish to discuss this news with me, you can DM me any time on Discord, or send me an email at ben @ handmade.network. It’s a busy time of year for me, but I will do my best to reply in a timely manner and to be as transparent as I can.

In the end, though, we don’t want this to drag us down. Our plans for the Handmade Network in 2025 remain unchanged, and I still stand by the content of The Next Ten Years. We are eager to put this behind us and spend 2025 building the Handmade Software Foundation, running jams, and supporting all the wonderful members of our community.

We’ll publish another news post soon outlining our plans for 2025 for both the Network and the Foundation. I am still incredibly excited about the next ten years of Handmade!

-Ben

This post has been co-authored by Abner Coimbre and Ben Visness.

On November 17, 2014, Casey Muratori went live with the first episode of Handmade Hero. The show was immediately electrifying: a game industry veteran sharing his knowledge with no coddling and no compromises. But Handmade Hero gave us more than just technical knowledge—it gave us an ethos for how to program.

10 years later, it’s clear that Handmade Hero was more than just a show—it started a movement. The Handmade community has grown to encompass thousands of people sharing their knowledge on Discord, attending conferences and meetups, shipping apps, and working to fix the mess that is modern software. In a world where most programming communities are built around a particular language or paradigm, the Handmade community is an anomaly, a place where brilliant programmers of all disciplines gather to help each other make truly great software.

This past July, we spent a week at Ben’s family cabin to reflect on the past ten years of Handmade. The key question: What should we encourage ourselves, and the community, to do for another ten?

Three Handmade hubs

First, we should review where we are now. For better or for worse, the Handmade community is under the stewardship of three separate entities:

- Handmade Hero: the show that started it all, by Casey Muratori

- Handmade Network: the online community, led by Ben Visness

- Handmade Cities: in-person events like conferences and meetups, by Abner Coimbre

At the time of writing, Handmade Hero is paused. Casey will share further updates down the line about whether he will continue the series or introduce a new one that better meets the educational needs of his current audience.

But the Handmade Network and Handmade Cities march on. The Handmade Network now runs three unique programming jams per year, has a thriving online community, and runs Unwind as a way of sharing the community’s knowledge. Handmade Cities has expanded to both Boston and Seattle, supports meetups across the world, and now hosts coworking groups and hangouts as well.

These are all amazing developments, but we don’t want to stop there. We want to take the Handmade community to the next level and really make a dent in the software industry, as has been our mission from the start. How do we achieve that? What can we do to scale up the Handmade movement and its impact in the world of software?

Our overarching goal is simple: we want Handmade programmers, and the software they write, to be successful. Straightforward enough. But what’s holding Handmade programmers back from that today, and what can we actually do to help them?

First we need to understand who Handmade programmers really are.

Three kinds of Handmade programmers

The boat ride up at the cabin yielded our first main conclusion: there are multiple kinds of Handmade programmers, each with different needs.

We’ve had ten years to observe and work alongside dozens, if not hundreds, of incredibly competent programmers. As we reflected on the best of the community, we noticed that they seem to fall into three major archetypes, which we are dubbing:

- The Entrepreneur

- The Researcher

- The Craftsperson

The Entrepreneur is someone who wants to ship Handmade software. These are programmers like Vjekoslav (creator of File Pilot), Wassimulator (creator of Cactus Image Viewer), or Nakst (creator of the Essence operating system). Their goal is to build the kind of software they know computers are capable of, and they are usually driven by the awful state of software today and motivated to create something better.

The Researcher is someone who wants to do ambitious work, but whose goal isn’t necessarily to make a product. Instead, their goal is to dig deep into systems, try new ideas, and share what they learn with the rest of the world. We have a few programmers that fit this mold, such as Allen Webster, creator of Mr. 4th Lab, and BYP, who is always down some kind of interesting rabbit hole.

The Craftsperson is someone who just loves the work of engineering. Their love of their craft gives them a wide breadth of knowledge and experience. They are less distracted by shiny new ideas and tend to be extremely productive as a result. We have many incredible craftspeople in the community, such as Reuben Dunnington, who contributed an entire libc implementation to Orca, and Skytrias, who has contributed large amounts of code to both the Odin and Orca projects.

These are broad categories and there are many community members who blur the lines. But we think these categories nonetheless describe most of the people in the Handmade community, and they inform what we can do to help them.

So how do we support Handmade programmers? Each archetype has a different set of needs:

- Entrepreneurs need product infrastructure: websites, payment processing, licensing systems, update delivery, email support, etc. They also need labor, a network of programmers who can contribute to their projects. And of course they need marketing; it shouldn’t be too surprising that low-level programmers are usually not great at advertising.

- Researchers need funding; at least enough to motivate them to do their research and to account for the fact that they likely won’t have a product to pay their bills. Perhaps more importantly, though, they need PR: for their research to make an impact, it needs to be published somehow, and people need to be aware of it. They also might benefit from project management: an outside voice helping them find meaningful goals and milestones that can be shared widely.

- Craftspeople need work; this means both a supply of interesting projects to work on and compensation for their efforts. They also deserve recognition: while many craftspeople don’t want the spotlight, it’s important for their work to be properly appreciated.

There are, obviously, a lot of different needs here. We may not be able to achieve them all right away, but our overall vision for the future of Handmade is to fulfill these needs for the community.

The Handmade awakening

Those are the tangible needs, but before going further, we should actually cover programmers’ emotional needs as well. It may sound strange, but this is actually an issue of growing importance for the community. (This realization came after some post-boat-ride Chocovine.)

At the heart of the issue is an awakening that nearly all Handmade programmers experience. Abner experienced it when he took his job at NASA. Ben experienced it when he read Casey’s article about compression-oriented programming. There are hundreds of Handmade programmers with a similar story, where they have a realization, an awakening, about what programming really is.

We started out as children writing programs for fun. We loved programming and loved making computers do amazing things. But then we went to school, and the joy of programming was slowly drained away. We were taught that it was foolish to write programs yourself, and that you should instead use the library or the game engine that someone smarter had written. We were taught about inheritance hierarchies and design patterns and hundreds of other useless concepts that obscure what the program is actually doing. And when we asked “why”, we were again told that it’s just how things work. Trust the experts. This is how software is made.

Then we found Handmade. We discovered that programming could be fun again. We discovered that there were programmers out there who cared about their craft. We discovered that all the fluff we were taught in school was only making things worse, and that if we instead learned how computers actually worked, a whole new world of programming would open up for us.

The obvious corollary, though, is that the software industry is a disaster. It seems too absurd to be true—surely there must be a good reason why so many programmers install is-number from npm. Surely there must be a reason for a to-do list to use 500MB of memory. Surely so many smart programmers couldn’t be wrong! And yet they are.

Ten years into their programming journey, many Handmade programmers face a crisis. They have grown so much as programmers, and learned so much about how things work, that they can’t stomach working in the industry any more. The thought of installing hundreds of dependencies and slapping together a React app is too much to bear. The money may be good, but if the work isn’t fulfilling, they can’t do it.

The need to fix the software industry is clearer than ever. It’s not enough to just show programmers the way. We need to change the status quo.

How to support their needs

How does one change the status quo? The only way is to fill the software industry with the kind of software we want to see.

To that end, the Handmade Network team is in the process of creating the Handmade Software Foundation, a 501(c)(6) nonprofit organization whose goal is to sustain the development of Handmade software and Handmade programmers. The needs of entrepreneurs, researchers, and craftspeople are varied, but we think we can make a big impact with just a few key people. One designer can offer their services to many entrepreneurs’ Handmade products. One “project manager” can meet regularly with the authors of official foundation projects, and one writer can help with a variety of blog posts, documentation sites, and grant proposals. And foundation projects can be an amazing source of work for the Handmade craftspeople who are looking for meaningful work. It will take some time to get going, but we think there’s huge potential as an “incubator” of sorts for all kinds of Handmade projects.

Meanwhile, Handmade Cities is all about growing the in-person events, with a focus on connecting programmers in real life. We host two main conferences: Handmade Boston in the summer for masterclasses and Handmade Seattle in the fall, which is our flagship event with talks, demos and even a job fair. We also run monthly meetups around the world with trusted hosts, plus bi-weekly co-working sessions and weekly hangouts. All of this builds on the idea that real change in the software world comes from in-person connections with like-minded people.

Over the years, Handmade events have helped people find new jobs, co-founders, lifelong friends, and even romantic partners. Our conferences are an anchor to the Handmade community and a platform for pushing forward programming projects.

How programmers can support us

The Handmade Software Foundation is still getting off the ground, and the process will take a fair amount longer. The Handmade Network team already spent a year waiting for the IRS to review a previous application, only for it to be rejected, and the entire process to start over. The end result will be worth it, but these things unfortunately take time. In the meantime, we encourage you to sign up for the email newsletter, and to start participating in the community. We look forward to scaling up Foundation activities in the coming years, and if you find this exciting, please feel free to ping Ben on Discord (bvisness) or send him an email at [email protected].

Handmade Cities, however, is already an active company run by Abner. All Handmade events are 100% indie, meaning Abner doesn’t take any sponsorship deals: he is funded by individuals like yourself. Organizing has been his full-time career for the last couple of years, and it pays significantly less than any traditional programming job, so the best way to support Abner is by throwing five bucks his way or getting tickets for the conferences. If you need to get a hold of Abner, ping him on Discord (abnercoimbre) or send him an email at [email protected].

The first 10 years of Handmade have had more of an impact on our lives than we ever could have imagined. We’re both so proud of everything the community has already accomplished, how many people it’s impacted, and the software it has produced. We are all better programmers, and better people, because Casey decided back in 2014 to share his knowledge and his ethos with the world.

We couldn’t be more excited about the next 10 years.

-Abner and Ben

The past few months have been a whirlwind. We hosted two jams, the Visibility Jam and the Wheel Reinvention Jam, almost back to back, each with an associated recap show. (We’ll schedule them better next year!)

In between was Handmade Boston, which was a delight—I met so many wonderful people, some of whom even submitted projects for the jam. And throughout it all, we made a slew of website updates, including an overhaul to our Discord integration.

Finally, we have been working with Abner and his team recently to improve the Handmade Cities website. The Handmade Network community scoured the internet to put together this master spreadsheet of all Handmade Cities media since the first conference in 2019. Asaf has mapped out the full sitemap of Abner’s current site and has been working with Devon to prioritize and port the contents of the site to the new one. I’m very excited for the end result, but my excitement has been tempered by recent events—events which provide a stark reminder of why the Handmade ethos is so important.

How stable is your platform?

Here’s the thing: Abner’s site currently runs on WordPress.

Those following the tech sphere will know that the WordPress ecosystem is on fire. Matt Mullenweg, director of the WordPress Foundation and CEO of Automattic, has decided to wage war against a WordPress hosting company called WP Engine. He has publicly called the company "a cancer to WordPress", blocked them from accessing core WordPress infrastructure, and even seized one of their popular plugins. All of this stems from an ostensible infringement on WordPress’s trademark, but the details make it look more like extortion than a trademark dispute.

This is not just drama. Nearly a tenth of Automattic’s employees have already resigned, and I expect more will follow. Mullenweg’s dick-measuring contest with DHH surely won’t help his case. (The post used to say "We’re now half a billion in revenue. Why are you still so small?") WordPress's executive director has resigned, as has most of the company's ecosystem division. What does this mean for the future development of WordPress, or the health of the developer ecosystem surrounding it? Time will tell, but it's a very bad sign for those who have built their businesses on the platform.

The situation is still developing, and information online is constantly being edited or deleted. By the time you read this article, you might need to look up some of the links on the Internet Archive.

Oh wait. That's under attack too.

Until recently, if you wanted to throw together a website, WordPress seemed like a sensible, stable choice. I've personally made multiple WordPress sites that have held up for years, if not decades. But thanks to one man, its future is now in jeopardy, and even the services that would act as a backup are disintegrating too.

A safe haven

This website, on the other hand, is proudly Handmade. The whole codebase is a single application written in Go. It statically links its dependencies. Our data is stored in a Postgres database on the same server. We use a few libraries here and there for syntax highlighting and Markdown rendering, but for the most part we just depend on the standard library HTTP server.
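To give a feel for how little is needed, here is a minimal sketch of a post page served with nothing but Go's standard library. It is not the actual code of this site: the `Post` type, the `posts` map (standing in for the Postgres database), and the `/post/` route are all assumptions made up for the example.

```go
package main

import (
	"fmt"
	"html"
	"net/http"
	"net/http/httptest"
	"strings"
)

// Post is a hypothetical stand-in for a row in a posts table.
type Post struct {
	Title string
	Body  string
}

// posts is an in-memory stand-in for the database (assumption: a real
// site would query Postgres here instead).
var posts = map[string]Post{
	"hello": {Title: "Hello Handmade", Body: "First post."},
}

// renderPost returns the HTTP status and HTML for a given slug.
func renderPost(slug string) (int, string) {
	p, ok := posts[slug]
	if !ok {
		return http.StatusNotFound, "<h1>Not found</h1>"
	}
	return http.StatusOK, fmt.Sprintf("<h1>%s</h1><p>%s</p>",
		html.EscapeString(p.Title), html.EscapeString(p.Body))
}

// postHandler serves /post/<slug> using the standard library only.
func postHandler(w http.ResponseWriter, r *http.Request) {
	slug := strings.TrimPrefix(r.URL.Path, "/post/")
	status, body := renderPost(slug)
	w.Header().Set("Content-Type", "text/html; charset=utf-8")
	w.WriteHeader(status)
	fmt.Fprint(w, body)
}

func main() {
	mux := http.NewServeMux()
	mux.HandleFunc("/post/", postHandler)

	// Exercise the handler in-process so the example terminates.
	// A deployment would instead call http.ListenAndServe(":8080", mux).
	req := httptest.NewRequest(http.MethodGet, "/post/hello", nil)
	rec := httptest.NewRecorder()
	mux.ServeHTTP(rec, req)
	fmt.Println(rec.Code, rec.Body.String())
}
```

Because Go compiles its dependencies into the binary, a program like this can be copied to a server and run on its own.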

This very post can be authored in Markdown, previewed in real time, and published instantly. It has a full revision history, permalinks, the works. We have a project system, a shadowban system to combat spam, and a robust Discord integration that powers the amazing showcase feeds across the site. The previous admin team did a great job building the foundation, and Asaf and I have rebuilt, extended, and refined it over the past several years. Every link to handmade.network still works, and will always work, for as long as we are around to maintain this site, and when someday we hand this off to another team, they'll have one simple database full of well-organized content.

Was this site hard to build? Kind of. Certainly it took us months to rebuild it from scratch back in 2021, and we've poured many more months into its development since. But this entire site was built by a mere handful of devs in their spare time. It's just a database with posts, threads, and projects, and a bunch of CRUD pages for managing them. Nothing about it is very complicated.

But thanks to our efforts, we are safe. Nobody can take away our platform, because we built our platform. As long as we can connect a computer to the internet, we'll be able to keep this site online.

Self-sufficiency is not selfish

So with Handmade Seattle approaching, we have redoubled our efforts. Being self-sufficient isn't just about protecting ourselves from the Matt Mullenwegs of the world. It's about empowering others to sustain themselves too.

What we have learned from building the Handmade Network website enables us to build websites for others. Abner's will be the first, but as we spin up the Handmade Software Foundation, we expect to make many more, each tailored to its author's needs. There's no reason for a website to be complicated; each one can be straightforward and simple. And if a project author decides they don't want our help any more? They can just take our code and run it themselves.

We hope this is a model for the whole Handmade community: a group of self-sufficient programmers working together to empower each other. By taking the responsibility on ourselves, we can build better software and share what we learn with the world.

The first step to building a new future for the software industry is to build tools for ourselves. This is why we do jams, this is why we do Unwind, this is why we do conferences. Handmade programmers need to lead the way by proving how much a few programmers can do, and how much better your software can be when you build it by hand.

See you all at Handmade Seattle in November.

-Ben